ENTROPY: its interpretations 1)


Entropy has been interpreted in many different ways. As early as 1870, BOLTZMANN proposed a statistical interpretation based on the collective behavior of the numerous molecules in a gas. In this view, increasing entropy, at least in an isolated system, indicates a trend toward ever-increasing and irreversible disorder in the system, i.e. the disappearance of all local differences in the distribution of its elements. This implies the disappearance of internal organization.
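BOLTZMANN's statistical view is usually summarized by the relation S = k_B ln W, where W is the number of microscopic arrangements (microstates) compatible with a given macroscopic state and k_B is Boltzmann's constant. The minimal Python sketch below illustrates it on a deliberately simplified toy model. It assumes N distinguishable molecules that can each sit in the left or right half of a box, with every microstate equally likely, and it ignores energy and velocities. The function names are hypothetical and this is not BOLTZMANN's own derivation.

```python
import math

def microstates(n_total: int, n_left: int) -> int:
    """Number of ways W to place n_left of n_total distinguishable molecules in the left half."""
    return math.comb(n_total, n_left)

def entropy_over_k(n_total: int, n_left: int) -> float:
    """Boltzmann entropy S / k_B = ln W for the macrostate 'n_left molecules on the left'."""
    return math.log(microstates(n_total, n_left))

def most_probable_split(n_total: int) -> int:
    """Macrostate (number of molecules on the left) with the largest W, i.e. maximum entropy."""
    return max(range(n_total + 1), key=lambda n: microstates(n_total, n))

N = 100
for n_left in (0, 10, 25, 50):
    w = microstates(N, n_left)
    prob = w / 2**N  # toy assumption: all 2**N microstates are equally likely
    print(f"{n_left:3d} left: W={w:.3e}  S/k_B={entropy_over_k(N, n_left):6.2f}  P={prob:.3e}")

print("most probable split:", most_probable_split(N))
```

W and S/k_B rise steeply toward the even split. In this toy model, the uniform distribution, in which local differences between the two halves have disappeared, is therefore by far the most probable macrostate and the one of maximum entropy.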

According to J. de ROSNAY: "The notion of entropy is extremely abstract and thus very difficult to imagine. However, some consider it intuitive. They merely refer mentally to real situations such as disorder, waste, loss of time or loss of information. But how can one really imagine a degraded energy? And why such a hierarchy of energies, and why this degradation?…

"Thanks to the mathematical relation between disorder and probability, it is possible to speak of an evolution towards a growing entropy through one or the other of the following expressions: "When left alone, an isolated system tends toward a state of maximum disorder" or, "When left alone, an isolated system tends towards a state of greater probability".

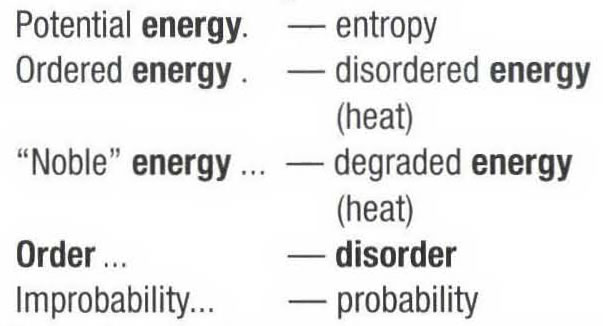

"These equivalent expressions may be resumed in the following table:

"The notions of entropy and of irreversibility, which derive from the second principle, have had an enormous impact on our worldview. By breaking the vicious circle of repetition in which the ancients had enclosed themselves, and by standing in opposition to biological evolution, the creator of order and organization, the notion of entropy indirectly opened the road to a philosophy of becoming and progress" (1975, p.137).

However, immediately thereafter ROSNAY points out that: "The image of the inexorable death of the universe, suggested by the second principle, profoundly influenced our philosophy, our morals, our worldview and even our art".

In fact, as J.L. ESPOSITO puts it, entropy can be considered a subjective view of systems held by an observer, and "… would be a measure of the degree of our knowledge of them" (1975, p.136). This is of course an extreme opinion, which nearly amounts to denying entropy any objective reality.

Quoting L. BRILLOUIN (1959), de ROSNAY asks: "How is it possible to understand life when the whole world is directed by a law like the second principle of thermodynamics, which points towards death and annihilation?" (1971, p.138).

While these questions are far from thoroughly answered, some scientists (SZILARD, BRILLOUIN himself, PRIGOGINE) have at least put forward partial solutions. At the cost of admittedly excessive simplification, these may be summarized as follows: entropic disorganization seems to be compensated by more organization, locally concentrated and growing over time. But the price is always paid: this organization seems to grow in proportion to a parallel acceleration of the increase in entropy.

Another viewpoint is developed by C. JOSLYN, who distinguishes between thermodynamic entropy (S) and statistical entropy (H). He writes: "Thermodynamic entropy is a property of certain kinds of real systems: thermodynamic systems. Thermodynamic entropy is a measured property of such systems, and is understood in a differential relation to other quantities, such as heat, work, and temperature, which are also measured on thermodynamic systems.

"Thermodynamic entropy is thus a 'content-full' concept specific to thermodynamic systems. The semantics of thermodynamic entropy is necessarily deeply embedded within the body of thermal physics, and it must be interpreted in the context of all of thermodynamics…"
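For a simple system, assumed here to be a fluid whose only work is pressure-volume work, the "differential relation" JOSLYN mentions is usually written through the Clausius definition of entropy and the fundamental relation of thermodynamics. These tie S to the reversibly exchanged heat Q_rev, the temperature T, the internal energy U, the pressure p and the volume V:

```latex
dS = \frac{\delta Q_{\mathrm{rev}}}{T},
\qquad
dU = \delta Q_{\mathrm{rev}} - p\,dV = T\,dS - p\,dV
```

Every symbol here refers to a measured property of a physical system. This is what makes S a "content-full" concept in JOSLYN's sense.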

One may wonder, however: are there "real" systems that are not thermodynamic systems?

JOSLYN continues: "But unlike thermodynamic entropy, statistical entropy is a property of a probability distribution, not a real system. Statistical entropy is calculated from the numerical properties of this distribution… Statistical entropy is thus essentially a 'context-free' concept. Whatever interpretation of the p_i (i.e. the probability distribution) we make need only adhere to the axioms of probability theory" (1991, p.622-3).
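JOSLYN's point that H depends only on the numerical properties of a distribution can be seen in the minimal Python sketch below. It assumes the usual Shannon form H = -Σ p_i log p_i, in base 2 so that H is measured in bits. The function name and the tolerance are hypothetical choices, not JOSLYN's. The function only checks the probability axioms and never asks what the p_i describe.

```python
import math
from typing import Sequence

def statistical_entropy(p: Sequence[float], base: float = 2.0, tol: float = 1e-9) -> float:
    """Statistical (Shannon) entropy H = -sum p_i log p_i of a probability distribution.

    Only the numbers p_i matter: the function checks the probability axioms
    (non-negative values summing to 1) and nothing about what they represent.
    """
    if any(pi < 0 for pi in p):
        raise ValueError("probabilities must be non-negative")
    if abs(sum(p) - 1.0) > tol:
        raise ValueError("probabilities must sum to 1")
    # Terms with p_i = 0 contribute nothing (p log p tends to 0 as p tends to 0).
    return -sum(pi * math.log(pi, base) for pi in p if pi > 0)

coin = [0.5, 0.5]        # could describe a fair coin toss ...
gas_halves = [0.5, 0.5]  # ... or a molecule's position in one of two halves of a box
biased = [0.9, 0.1]

print(statistical_entropy(coin))        # about 1 bit
print(statistical_entropy(gas_halves))  # same value: the interpretation plays no role
print(statistical_entropy(biased))      # lower: the distribution is less uniform
```

The coin and the gas molecule yield the same H because they share the same numbers. The calculation itself is "context-free", which is exactly the contrast JOSLYN draws with thermodynamic entropy.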

Categories

- 1) General information

- 2) Methodology or model

- 3) Epistemology, ontology and semantics

- 4) Human sciences

- 5) Discipline oriented

Publisher

Bertalanffy Center for the Study of Systems Science (2020).

To cite this page, please use the following information:

Bertalanffy Center for the Study of Systems Science (2020). Title of the entry. In Charles François (Ed.), International Encyclopedia of Systems and Cybernetics (2). Retrieved from www.systemspedia.org/[full/url]
